Understanding MCP Server Architecture in Multi-Agent AI Systems

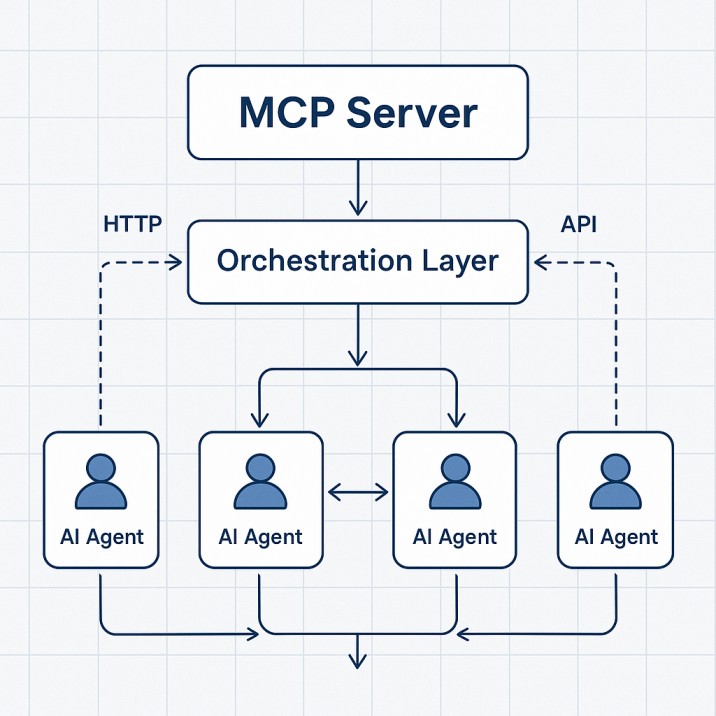

A Model Context Protocol (MCP) server offers a way to build scalable multi-agent AI systems that handle complex, distributed workloads efficiently and reliably. As organizations deploy AI agents across more of their infrastructure, engineers and technical leaders need to understand MCP server architecture to build robust, production-ready systems.

MCP servers serve as the backbone for coordinating multiple AI agents, managing their interactions, and ensuring seamless communication between different components of a distributed AI system. Unlike traditional monolithic AI applications, MCP-based architectures enable organizations to deploy specialized agents that can work together to solve complex problems while maintaining clear separation of concerns and scalability.

The Evolution of Multi-Agent AI Systems

The journey from single-agent AI applications to sophisticated multi-agent systems represents a fundamental shift in how we approach AI development. Traditional AI systems operated in isolation, processing inputs and generating outputs without collaboration or coordination with other AI entities. However, as business requirements became more complex and AI capabilities expanded, the need for collaborative AI systems became apparent.

Multi-agent AI systems emerged as a solution to handle tasks that require diverse expertise, parallel processing, and coordinated decision-making. These systems can break down complex problems into manageable components, assign specialized agents to handle specific aspects, and integrate results to produce comprehensive solutions. The MCP server architecture provides the infrastructure needed to make this coordination possible at scale.

Core Components of MCP Server Infrastructure

Agent Registry and Discovery

The agent registry serves as the central directory for all AI agents within the MCP ecosystem. This component maintains detailed information about each agent's capabilities, current status, resource requirements, and availability. The registry enables dynamic agent discovery, allowing new agents to join the system seamlessly and existing agents to locate suitable collaborators for specific tasks.

The discovery mechanism matches tasks to agents by comparing each task's requirements against agent capabilities and current workload. When a new task arrives, the MCP server queries the registry for the most suitable agents, weighing processing capacity, domain expertise, and current availability.
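To make the registry and discovery idea concrete, here is a minimal sketch. It assumes a registry that filters agents by capability and availability, then ranks them by load. The names `AgentRecord`, `AgentRegistry`, and `discover` are hypothetical and chosen for illustration; they are not part of any MCP API.

```python
from dataclasses import dataclass


@dataclass
class AgentRecord:
    """One registry entry; every field name here is illustrative."""
    name: str
    capabilities: set[str]
    max_tasks: int
    active_tasks: int = 0
    available: bool = True

    @property
    def load(self) -> float:
        # Fraction of the agent's capacity currently in use.
        return self.active_tasks / self.max_tasks


class AgentRegistry:
    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        self._agents[record.name] = record

    def discover(self, required: set[str]) -> list[AgentRecord]:
        """Return available agents that cover every required capability,
        least loaded first (name breaks ties)."""
        matches = [
            a for a in self._agents.values()
            if a.available
            and a.active_tasks < a.max_tasks
            and required <= a.capabilities
        ]
        return sorted(matches, key=lambda a: (a.load, a.name))


registry = AgentRegistry()
registry.register(AgentRecord("summarizer-1", {"summarize", "translate"}, max_tasks=4, active_tasks=3))
registry.register(AgentRecord("summarizer-2", {"summarize"}, max_tasks=4, active_tasks=1))
registry.register(AgentRecord("ocr-1", {"ocr"}, max_tasks=2))

candidates = [a.name for a in registry.discover({"summarize"})]
print(candidates)  # ['summarizer-2', 'summarizer-1']
```

A production registry would also need persistence and status updates from the agents themselves; the sketch only shows the matching step.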

Communication Protocol Layer

The communication protocol layer handles all inter-agent communication within the MCP system. This layer abstracts the complexity of distributed communication, providing a standardized interface for agents to exchange information, coordinate actions, and share results. The protocol supports both synchronous and asynchronous communication patterns, allowing agents to operate efficiently regardless of their processing speeds or availability windows.

Message routing, delivery guarantees, and error handling are all managed at this layer, ensuring reliable communication even in the presence of network issues or agent failures. The protocol also includes built-in support for message prioritization, allowing critical communications to take precedence over routine updates.
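Message prioritization can be sketched with a priority queue. The example below is an assumption about how such a mailbox might look, not a description of a real MCP component: `Envelope` and `PriorityMailbox` are hypothetical names, and the convention that a lower number means higher urgency is chosen for illustration.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass(order=True)
class Envelope:
    priority: int  # lower number = more urgent (assumed convention)
    seq: int       # tie-breaker that keeps FIFO order within a priority
    recipient: str = field(compare=False)
    payload: Any = field(compare=False)


class PriorityMailbox:
    def __init__(self) -> None:
        self._heap: list[Envelope] = []
        self._counter = itertools.count()

    def send(self, recipient: str, payload: Any, priority: int = 5) -> None:
        envelope = Envelope(priority, next(self._counter), recipient, payload)
        heapq.heappush(self._heap, envelope)

    def receive(self) -> Optional[Envelope]:
        # Pops the most urgent message, or returns None when empty.
        return heapq.heappop(self._heap) if self._heap else None


mailbox = PriorityMailbox()
mailbox.send("planner", "routine status update")
mailbox.send("planner", "agent ocr-1 failed", priority=0)
mailbox.send("planner", "second routine update")

delivered = []
while (message := mailbox.receive()) is not None:
    delivered.append(message.payload)
```

The critical failure notice is delivered first, and the two routine updates keep their original order. Delivery guarantees and retries are out of scope for this sketch.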

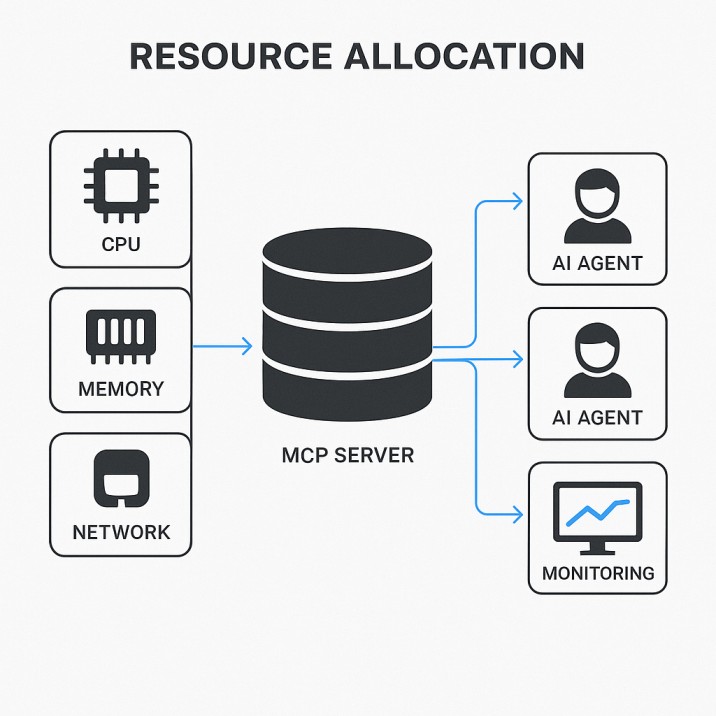

Resource Management and Allocation

Effective resource management is crucial for maintaining performance and preventing bottlenecks in multi-agent systems. The MCP server includes sophisticated resource allocation mechanisms that monitor system resources, predict demand patterns, and automatically adjust allocation based on current needs and historical usage patterns.

The resource management system tracks computational resources, memory usage, network bandwidth, and storage requirements for each agent. It can dynamically scale resources up or down based on demand, ensuring optimal performance while minimizing waste. This capability is particularly important for organizations deploying vertical AI agents that may have varying resource requirements throughout their operational cycles.
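One simple way to express "scale up or down based on demand" is a proportional rule: size the pool so that average utilization moves toward a target. The sketch below assumes that rule, which has the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the default target, and the bounds are illustrative assumptions.

```python
import math


def desired_replicas(
    current: int,
    utilization: float,
    target: float = 0.5,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return the replica count that would bring average utilization
    back toward `target`, clamped to the configured bounds."""
    if current < 1:
        raise ValueError("current must be at least 1")
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * utilization / target)
    return max(min_replicas, min(max_replicas, raw))


scale_up = desired_replicas(current=4, utilization=0.9)    # 8
scale_down = desired_replicas(current=4, utilization=0.2)  # 2
capped = desired_replicas(current=4, utilization=2.0)      # 10 (hits max_replicas)
```

Predicting demand from historical usage, as the text describes, would replace the measured `utilization` with a forecast; the clamping logic stays the same.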

Scalability Patterns and Implementation Strategies

Horizontal Scaling Architecture

Horizontal scaling is generally the most practical way to handle increased demand in multi-agent AI systems. MCP servers support it through a distributed architecture that can span multiple servers, data centers, or cloud regions, so organizations can add capacity by deploying additional MCP server instances rather than upgrading existing hardware.

The horizontal scaling architecture includes built-in load balancing, automatic failover, and cross-region replication capabilities. When demand increases, new MCP server instances can be deployed automatically, and existing agents can be redistributed across the expanded infrastructure. This ensures consistent performance even during peak usage periods.
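The article does not say how agents are redistributed when instances are added. One common technique, used here purely as an illustrative assumption, is rendezvous (highest-random-weight) hashing: each agent is placed on the server with the highest hash score, so adding a server only moves the agents that now score highest on the new one. The server and agent names are hypothetical.

```python
import hashlib


def _score(server: str, agent: str) -> int:
    # Deterministic score for a (server, agent) pair.
    digest = hashlib.sha256(f"{server}:{agent}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(agent: str, servers: list[str]) -> str:
    """Pick the server with the highest hash score for this agent."""
    if not servers:
        raise ValueError("at least one server is required")
    return max(servers, key=lambda server: _score(server, agent))


agents = [f"agent-{i}" for i in range(20)]
before = {a: place(a, ["mcp-1", "mcp-2"]) for a in agents}
after = {a: place(a, ["mcp-1", "mcp-2", "mcp-3"]) for a in agents}

# Agents whose placement changed after the third instance joined.
moved = [a for a in agents if before[a] != after[a]]
```

Every agent in `moved` lands on `mcp-3`; no agent is shuffled between the two original instances, which keeps redistribution cheap.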

Agent Clustering and Load Distribution

Agent clustering involves grouping related agents together to optimize performance and reduce communication overhead. The MCP server automatically identifies agents that frequently collaborate and places them in the same cluster, minimizing network latency and improving response times. This clustering approach is particularly beneficial for custom-built AI agents that require close coordination.

Load distribution algorithms ensure that work is evenly distributed across available agents, preventing any single agent from becoming a bottleneck. The system considers factors such as agent capabilities, current workload, and historical performance when making distribution decisions, ensuring optimal utilization of available resources.
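As a rough illustration of even load distribution, the sketch below assigns each task to the agent with the fewest tasks so far. It considers only current workload; capabilities and historical performance, which the text also mentions, are left out to keep it short. The function name and agent names are hypothetical.

```python
import heapq


def distribute(tasks: list[str], current_load: dict[str, int]) -> dict[str, list[str]]:
    """Assign each task to the least-loaded agent.

    `current_load` maps agent name -> number of tasks it already holds.
    """
    if not current_load:
        raise ValueError("at least one agent is required")
    heap = [(load, name) for name, load in current_load.items()]
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {name: [] for name in current_load}
    for task in tasks:
        load, name = heapq.heappop(heap)  # agent with the fewest tasks
        assignment[name].append(task)
        heapq.heappush(heap, (load + 1, name))
    return assignment


plan = distribute(["t1", "t2", "t3", "t4"], {"agent-a": 2, "agent-b": 0})
print(plan)  # {'agent-a': ['t3'], 'agent-b': ['t1', 't2', 't4']}
```

Because `agent-a` starts with two tasks, `agent-b` receives work until the two are level, and both end with three tasks in total.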

Fault Tolerance and Recovery Mechanisms

Building fault tolerance into MCP server architecture is essential for maintaining system reliability in production environments. The system includes multiple layers of fault tolerance, from individual agent monitoring to full system backup and recovery capabilities.

Agent health monitoring continuously tracks the status of each agent, detecting failures or performance degradation before they impact system operations. When an agent fails, the system can automatically restart it, migrate its workload to other available agents, or deploy replacement agents as needed. This approach ensures continuous operation even in the presence of hardware failures or software issues.
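Health monitoring and workload migration might look like the following sketch, which assumes a heartbeat model: an agent that has not reported within a timeout is treated as failed, and its tasks move to the least-busy healthy agent. `HealthMonitor` and `migrate` are hypothetical names, and timestamps are passed in explicitly so the example stays deterministic.

```python
class HealthMonitor:
    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self._last_seen: dict[str, float] = {}

    def heartbeat(self, agent: str, now: float) -> None:
        self._last_seen[agent] = now

    def failed_agents(self, now: float) -> list[str]:
        """Agents whose last heartbeat is older than the timeout."""
        return sorted(
            agent for agent, seen in self._last_seen.items()
            if now - seen > self.timeout
        )


def migrate(workloads: dict[str, list[str]], failed: list[str]) -> dict[str, list[str]]:
    """Move tasks from failed agents to the healthy agent with the fewest tasks."""
    healthy = {a: list(tasks) for a, tasks in workloads.items() if a not in failed}
    if not healthy:
        raise RuntimeError("no healthy agents left")
    for agent in failed:
        for task in workloads.get(agent, []):
            target = min(healthy, key=lambda a: (len(healthy[a]), a))
            healthy[target].append(task)
    return healthy


monitor = HealthMonitor(timeout=10.0)
monitor.heartbeat("agent-a", now=0.0)
monitor.heartbeat("agent-b", now=0.0)
monitor.heartbeat("agent-a", now=12.0)  # agent-b has gone silent

failed = monitor.failed_agents(now=15.0)
recovered = migrate({"agent-a": ["t1"], "agent-b": ["t2", "t3"], "agent-c": []}, failed)
```

Restarting the failed agent or deploying a replacement would be separate recovery paths; this sketch covers only detection and migration.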

Performance Optimization Techniques

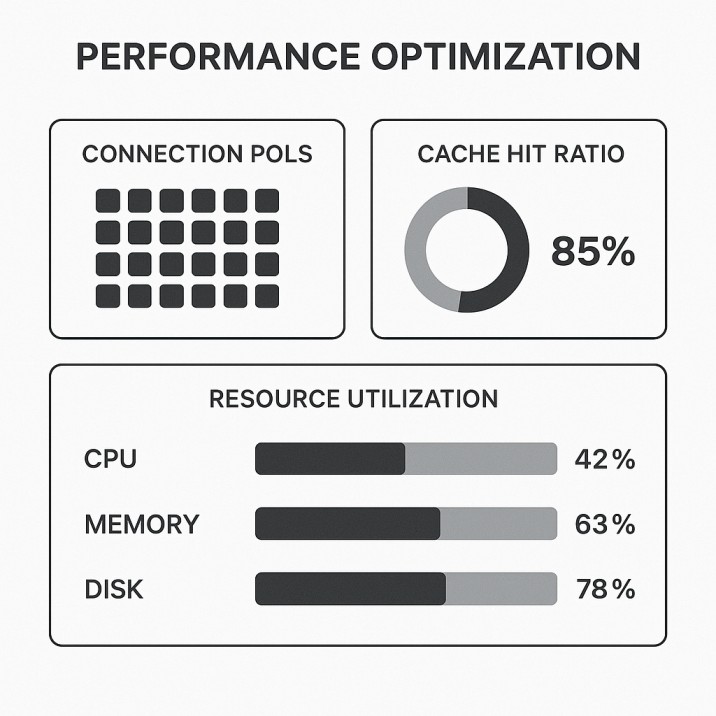

Caching and Data Management

Effective caching strategies are crucial for maintaining high performance in multi-agent systems. The MCP server implements multi-level caching that includes agent-level caches, shared caches for frequently accessed data, and distributed caches that span multiple server instances. This caching hierarchy reduces redundant processing and minimizes data transfer between agents.

Data management policies ensure that cached data remains consistent and up-to-date across all agents. The system includes intelligent cache invalidation mechanisms that automatically update cached data when underlying sources change, preventing agents from making decisions based on stale information.
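One way to keep cached data from going stale is version-based invalidation: the cache stores the version it saw and re-reads only when the source's version has changed. The sketch below assumes that checking a version is much cheaper than a full read. `Source` is a stand-in for whatever system owns the data, and both class names are hypothetical.

```python
from typing import Any


class Source:
    """Stand-in for the underlying data source; bumps a version on every write."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}
        self._versions: dict[str, int] = {}
        self.reads = 0  # counts expensive full reads

    def write(self, key: str, value: Any) -> None:
        self._data[key] = value
        self._versions[key] = self._versions.get(key, 0) + 1

    def version(self, key: str) -> int:
        return self._versions.get(key, 0)

    def read(self, key: str) -> Any:
        self.reads += 1
        return self._data[key]


class VersionedCache:
    def __init__(self, source: Source) -> None:
        self._source = source
        self._entries: dict[str, tuple[int, Any]] = {}

    def get(self, key: str) -> Any:
        current = self._source.version(key)
        cached = self._entries.get(key)
        if cached is not None and cached[0] == current:
            return cached[1]  # cache hit: version unchanged
        value = self._source.read(key)  # miss or stale entry: reload
        self._entries[key] = (current, value)
        return value


source = Source()
cache = VersionedCache(source)
source.write("price", 10)

first = cache.get("price")
second = cache.get("price")   # served from cache
reads_before_update = source.reads
source.write("price", 12)     # invalidates the cached entry
third = cache.get("price")
```

The second lookup does not touch the source, and the lookup after the write returns the new value instead of the stale one.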

Connection Pooling and Resource Optimization

Connection pooling reduces the overhead associated with establishing and maintaining connections between agents and external services. The MCP server maintains pools of pre-established connections that can be reused by multiple agents, significantly reducing connection establishment time and resource consumption.
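A connection pool can be sketched in a few lines with a thread-safe queue. This is a generic illustration rather than an MCP server API: `ConnectionPool` and `open_connection` are hypothetical, and the "connection" is a plain dictionary standing in for a real client object.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Iterator


class ConnectionPool:
    def __init__(self, factory: Callable[[], object], size: int) -> None:
        # Connections are created once, up front, and then reused.
        self._idle: "queue.Queue[object]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout: float = 1.0) -> Iterator[object]:
        # Raises queue.Empty if no connection frees up within `timeout`.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool


opened: list[int] = []


def open_connection() -> dict:
    opened.append(len(opened) + 1)
    return {"id": opened[-1]}


pool = ConnectionPool(open_connection, size=2)
for _ in range(5):
    with pool.connection() as conn:
        pass  # an agent would issue its request here

with pool.connection() as first_conn, pool.connection() as second_conn:
    distinct = first_conn is not second_conn
    try:
        with pool.connection(timeout=0.01):
            exhausted = False
    except queue.Empty:
        exhausted = True  # both connections are checked out
```

Five sequential uses open only two connections in total, and a third simultaneous checkout times out instead of opening a new connection.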

Resource optimization techniques include dynamic resource allocation based on real-time demand, intelligent scheduling that considers agent processing patterns, and automatic garbage collection that frees up unused resources. These optimizations ensure that system resources are used efficiently while maintaining high performance.

Real-World Implementation Patterns

Enterprise Integration Scenarios

Large enterprises typically deploy MCP servers to coordinate multiple specialized AI agents across different departments and business functions. Common implementation patterns include customer service automation, where multiple agents handle different aspects of customer inquiries, and supply chain optimization, where agents monitor various supply chain components and coordinate responses to disruptions.

Integration with existing enterprise systems requires careful consideration of security, compliance, and performance requirements. MCP servers can be deployed in hybrid configurations that combine on-premises infrastructure with cloud resources, providing flexibility while maintaining control over sensitive data and processes.

Cloud-Native Deployment Models

Cloud-native deployments leverage containerization, microservices architecture, and cloud-specific services to maximize scalability and flexibility. MCP servers can be deployed using container orchestration platforms like Kubernetes, enabling automatic scaling, rolling updates, and simplified management of complex multi-agent systems.

Cloud deployment models also enable organizations to take advantage of managed services for databases, message queues, and monitoring, reducing operational overhead while maintaining high availability and performance. This approach is particularly beneficial for organizations implementing automation workflows that require reliable, scalable infrastructure.

Edge Computing Integration

Edge computing scenarios present unique challenges for multi-agent AI systems, including limited computational resources, intermittent connectivity, and latency requirements. MCP servers can be deployed in edge environments using lightweight configurations that prioritize essential functionality while maintaining coordination capabilities.

Edge deployments often involve hierarchical architectures where edge MCP servers coordinate local agents while maintaining connections to central MCP servers for system-wide coordination and updates. This approach enables organizations to deploy AI agents closer to data sources and users while maintaining centralized control and monitoring.

Security and Compliance Considerations

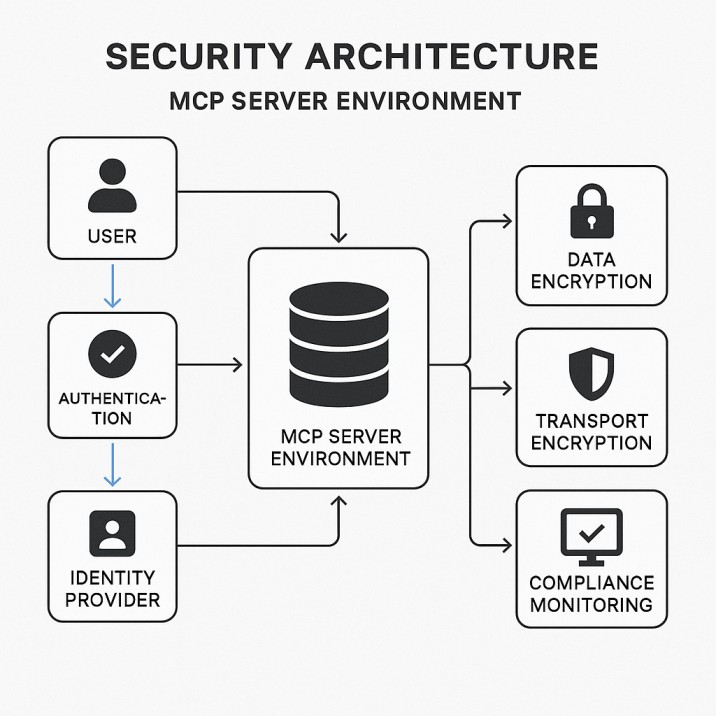

Authentication and Authorization

Security in multi-agent systems requires robust authentication and authorization mechanisms that can handle the complex interactions between multiple AI agents. The MCP server implements role-based access control (RBAC) that defines specific permissions for each agent based on its function and security requirements.

Authentication mechanisms include support for various identity providers, certificate-based authentication, and API key management. The system can integrate with existing enterprise identity management systems, ensuring consistent security policies across all AI agents and supporting compliance with organizational security standards.
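The role-based access control described above can be reduced to two mappings: roles to permissions, and agents to roles. The following sketch assumes a deny-by-default policy. The role names, permission strings, and the `AccessControl` class are illustrative and not taken from any MCP specification.

```python
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "reader": {"task:read"},
    "worker": {"task:read", "task:execute"},
    "admin": {"task:read", "task:execute", "agent:deploy"},
}


class AccessControl:
    def __init__(self, role_permissions: dict[str, set[str]]) -> None:
        self._role_permissions = role_permissions
        self._agent_roles: dict[str, set[str]] = {}

    def assign(self, agent: str, role: str) -> None:
        if role not in self._role_permissions:
            raise KeyError(f"unknown role: {role}")
        self._agent_roles.setdefault(agent, set()).add(role)

    def is_allowed(self, agent: str, permission: str) -> bool:
        """Deny by default: unknown agents and unlisted permissions get False."""
        roles = self._agent_roles.get(agent, set())
        return any(permission in self._role_permissions[role] for role in roles)


acl = AccessControl(ROLE_PERMISSIONS)
acl.assign("billing-agent", "worker")
acl.assign("ops-agent", "admin")

can_execute = acl.is_allowed("billing-agent", "task:execute")  # True
can_deploy = acl.is_allowed("billing-agent", "agent:deploy")   # False
unknown = acl.is_allowed("rogue-agent", "task:read")           # False
```

Authentication (proving which agent is calling) would happen before this check; the sketch covers authorization only.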

Data Protection and Privacy

Data protection in multi-agent systems involves securing data both at rest and in transit, encrypting sensitive information, and complying with privacy regulations. The MCP server includes built-in encryption for inter-agent communications and stored data, designed to keep the performance overhead small.

Privacy protection mechanisms include data anonymization, access logging, and audit trails that track all data access and modifications. These capabilities are essential for organizations deploying AI agents that handle sensitive customer information or operate in regulated industries.

Compliance and Audit Requirements

Compliance requirements vary by industry and jurisdiction, but common requirements include audit logging, data retention policies, and regulatory reporting capabilities. The MCP server provides comprehensive logging and monitoring capabilities that can be configured to meet specific compliance requirements.

Audit trails track all system activities, including agent deployments, configuration changes, and data access patterns. This information can be used to demonstrate compliance with regulatory requirements and investigate security incidents or system anomalies.

Monitoring and Observability

Performance Metrics and KPIs

Effective monitoring of multi-agent AI systems requires tracking a wide range of performance metrics and key performance indicators (KPIs). The MCP server provides comprehensive monitoring capabilities that track system performance, agent health, and business-level metrics.

Performance metrics include response times, throughput, error rates, and resource utilization across all system components. Business-level KPIs might include task completion rates, accuracy metrics, and cost per transaction, providing insights into the overall effectiveness of the multi-agent system.
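The metrics listed above are straightforward to compute from raw request records. The sketch below assumes a simple in-memory window of latencies and an error count, and uses the nearest-rank method for the 95th percentile. The function names and the sample numbers are made up for illustration.

```python
def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile; `pct` is an integer from 1 to 100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    # Integer ceiling of len * pct / 100 avoids floating-point edge cases.
    rank = max(1, (len(ordered) * pct + 99) // 100)
    return ordered[rank - 1]


def summarize(latencies_ms: list[float], errors: int, window_s: float) -> dict[str, float]:
    """Summarize one monitoring window of completed requests."""
    total = len(latencies_ms)
    if total == 0 or window_s <= 0:
        raise ValueError("need at least one request and a positive window")
    return {
        "throughput_rps": total / window_s,
        "error_rate": errors / total,
        "p95_ms": percentile(latencies_ms, 95),
    }


# 100 requests with latencies 1..100 ms, 5 of them failed, over a 50 s window.
report = summarize([float(ms) for ms in range(1, 101)], errors=5, window_s=50.0)
```

Here `report` comes out as a throughput of 2.0 requests per second, an error rate of 0.05, and a p95 latency of 95.0 ms. Business-level KPIs such as cost per transaction would be derived from the same records joined with billing data.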

Distributed Tracing and Debugging

Distributed tracing provides visibility into the flow of requests through complex multi-agent systems, enabling developers to identify performance bottlenecks and debug issues that span multiple agents. The MCP server includes built-in distributed tracing capabilities that track requests as they move through the system.
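The core of distributed tracing is small: every unit of work records a shared trace ID and the ID of the span that caused it. The sketch below shows that propagation with a hypothetical `Span` class. It is not the API of any particular tracing library, and the agent names are invented.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str                    # shared by every span in one request
    parent_id: Optional[str] = None  # None marks the root span
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])

    def child(self, name: str) -> "Span":
        """Create a span for downstream work, inheriting the trace ID."""
        return Span(name=name, trace_id=self.trace_id, parent_id=self.span_id)


def start_trace(name: str) -> Span:
    return Span(name=name, trace_id=uuid.uuid4().hex)


root = start_trace("handle-request")
plan = root.child("planner-agent")
search = plan.child("search-agent")

# Walking parent_id links reconstructs the path a request took.
path = [root.name, plan.name, search.name]
```

When each agent logs its span, a collector can group records by `trace_id` and rebuild the call tree from the `parent_id` links, which is what makes cross-agent bottlenecks visible.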

Debugging capabilities include real-time log aggregation, error correlation, and performance profiling tools that help developers identify and resolve issues quickly. These tools are essential for maintaining system reliability and performance in production environments.

Alerting and Incident Response

Proactive alerting capabilities help operations teams identify and respond to issues before they impact system performance or availability. The MCP server includes configurable alerting rules that can trigger notifications based on various conditions, including performance thresholds, error rates, and system resource usage.
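A configurable alerting rule usually combines a threshold with a duration, so that a single spike does not page anyone. The sketch below assumes a rule fires only when the last N samples all exceed the threshold. `AlertRule` and `is_firing` are hypothetical names, and the sample values are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    name: str
    threshold: float
    for_samples: int  # consecutive breaching samples required to fire


def is_firing(rule: AlertRule, samples: list[float]) -> bool:
    """True when the most recent `for_samples` values all exceed the threshold."""
    if rule.for_samples < 1:
        raise ValueError("for_samples must be at least 1")
    recent = samples[-rule.for_samples:]
    return len(recent) == rule.for_samples and all(v > rule.threshold for v in recent)


rule = AlertRule("high-error-rate", threshold=0.05, for_samples=3)

spike = is_firing(rule, [0.01, 0.09, 0.02, 0.08])      # False: not sustained
sustained = is_firing(rule, [0.01, 0.06, 0.07, 0.08])  # True: three in a row
too_few = is_firing(rule, [0.09, 0.09])                # False: not enough data
```

Routing a firing rule to a notification channel or an automated remediation step would sit on top of this evaluation.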

Incident response capabilities include automated remediation actions, escalation procedures, and integration with existing incident management systems. These capabilities ensure that issues are addressed quickly and effectively, minimizing impact on system operations.

Future Trends and Emerging Technologies

AI-Driven System Optimization

The future of MCP server architecture includes AI-driven optimization capabilities that can automatically tune system parameters, predict resource needs, and optimize agent placement based on usage patterns and performance history. These capabilities will enable self-managing multi-agent systems that can adapt to changing conditions without human intervention.

Machine learning algorithms will be integrated into the MCP server to provide predictive analytics, anomaly detection, and automated optimization. These capabilities will help organizations maximize the efficiency and effectiveness of their multi-agent AI systems while reducing operational overhead.

Integration with Emerging AI Technologies

As new AI technologies emerge, MCP servers will need to adapt to support new types of agents and capabilities. This includes integration with advanced AI frameworks, support for new AI models, and capabilities for handling emerging use cases.

The modular architecture of MCP servers makes them well-suited for integrating new technologies while maintaining compatibility with existing agents and systems. This flexibility ensures that organizations can adopt new AI capabilities without requiring complete system replacements.

Standardization and Interoperability

The development of industry standards for multi-agent AI systems will drive improvements in interoperability and portability. MCP servers are designed to support standard protocols and interfaces, enabling integration with third-party systems and migration between different deployment environments.

Standardization efforts will also focus on security, compliance, and performance benchmarks, helping organizations evaluate and compare different multi-agent AI solutions. This standardization will accelerate adoption and reduce the complexity of implementing multi-agent systems.

Best Practices for MCP Server Deployment

Planning and Architecture Design

Successful MCP server deployment requires careful planning and architecture design that considers current requirements, future growth, and integration needs. Organizations should start with a clear understanding of their use cases, performance requirements, and scalability goals.

Architecture design should include considerations for data flow, security requirements, compliance needs, and integration points with existing systems. A well-designed architecture will provide the foundation for scalable, maintainable multi-agent AI systems that can evolve with changing business requirements.

Testing and Validation Strategies

Comprehensive testing is essential for ensuring the reliability and performance of multi-agent AI systems. Testing strategies should include unit testing for individual agents, integration testing for agent interactions, and system-level testing for overall performance and scalability.

Validation strategies should verify that the system meets functional requirements, performance targets, and security standards. This includes testing failure scenarios, recovery procedures, and scalability limits to ensure that the system can handle production workloads reliably.

Deployment and Operations Management

Effective deployment and operations management requires establishing clear procedures for system deployment, configuration management, monitoring, and maintenance. Organizations should implement automation tools and processes that reduce manual effort and minimize the risk of errors.

Operations management should include regular performance reviews, capacity planning, and system optimization activities. This ongoing management ensures that the multi-agent AI system continues to meet performance requirements and business objectives as it scales and evolves.

Conclusion: Building the Future of Scalable AI

MCP server architecture represents a fundamental advancement in building scalable multi-agent AI systems that can handle complex, distributed workloads while maintaining efficiency and reliability. As organizations increasingly rely on AI agents to automate processes, make decisions, and provide services, the importance of robust, scalable infrastructure becomes critical.

The key to success with MCP servers lies in understanding the core architectural principles, implementing appropriate scalability patterns, and following best practices for deployment and operations. Organizations that invest in building solid MCP server foundations will be well-positioned to take advantage of emerging AI technologies and scale their AI capabilities as business requirements evolve.

The future of multi-agent AI systems depends on continued innovation in infrastructure, protocols, and management tools. MCP servers provide the foundation for this evolution, enabling organizations to build sophisticated AI systems that can adapt, scale, and perform reliably in production environments.

As the field continues to evolve, organizations should focus on building flexible, scalable architectures that can accommodate new technologies while maintaining compatibility with existing systems. The investment in MCP server infrastructure today will enable organizations to realize the full potential of multi-agent AI systems in the future.

For organizations ready to explore the possibilities of multi-agent AI systems, the MCP server architecture provides a proven path to scalable, reliable deployments that can grow with business needs and technological advancement. The combination of robust infrastructure, comprehensive monitoring, and best practice implementation creates the foundation for successful AI agent deployment at scale.
